Ms Compose 95

Briefly

This is an experiment based on the Web Audio API, in which the user is presented with an array of sequencers. The sequencer buttons are sized so that it feels ambiguous whether each one is a button or a pixel. The user can draw across them, and the drawing turns into sequences. Each color represents an audio file, which is repeated across many sequencers, because each sequencer has its own timbre, running speed and length. All the speeds and lengths are powers of two, because the intention is to make loops, and a 4/4 measure is the easiest to understand.

A good way to get started is to open the window and simply drag the cursor across it once.

Concept

Here I experimented with having multiple references to the same sound from different sequencers. The interesting point of this kind of interaction is that you can generate fractal patterns without having to explicitly declare each instance of a note.

Fractal patterns

The idea is easier to explain with an example. The most standard, basic pattern of electronic dance music would look like this:

(In the expressions, N stands for the step number.)

Snare, every 8 beats `N%8==0`

Kick, every four beats `N%4==0`

Hi-hat, every second beat `N%2==0`
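A minimal JavaScript sketch can make the three rules concrete by printing one 16-step bar, treating each step as one beat. The `rules` object and the `renderRow` helper are illustrative names, not code from the piece.

```javascript
// Sketch: render the three rules as a 16-step grid ("x" = event, "." = rest).
const STEPS = 16;

// Each rule maps a step number N to true when the instrument should play.
const rules = {
  snare: (N) => N % 8 === 0,
  kick: (N) => N % 4 === 0,
  hihat: (N) => N % 2 === 0,
};

// Build one row of the grid by evaluating the rule on every step.
function renderRow(rule, steps = STEPS) {
  let row = "";
  for (let N = 0; N < steps; N++) row += rule(N) ? "x" : ".";
  return row;
}

for (const [name, rule] of Object.entries(rules)) {
  console.log(name.padEnd(6), renderRow(rule));
}
```

Every snare hit coincides with a kick, and every kick with a hi-hat: each layer is a subset of the faster one.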

This creates a very plain pattern with no tension, because we are highlighting the strongest steps in a very orthogonal way: if the step number is a multiple of a power of two, it is a strong step, and the higher the power of two it is a multiple of, the stronger the step. In other words, a layer at strength S plays on every step N such that

`N % 2^S == 0`

where S is this strength factor and 2^S means 2 raised to the power S.

This can be applied to time signatures other than 4/4 by replacing the 2 with the number of beats per measure. For instance, a 3/4 fractal pattern would be `N % 3^S == 0`. It would be easy to build a sequencer whose only parameter is the number of beats per measure. A good example of a fractal rhythm is the Risset rhythm.
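The strength factor and its generalization to other bases can be sketched as follows. `strength`, `orthogonalLayer` and the `maxS` cap are hypothetical names chosen for illustration; the cap is only there because step 0 is a multiple of every power, so its strength would otherwise be unbounded.

```javascript
// Strength of step N: the largest S (up to maxS) such that N % base^S == 0.
// base = 2 for 4/4-style patterns, 3 for 3/4-style patterns.
function strength(N, base = 2, maxS = 4) {
  let S = 0;
  while (S < maxS && N % Math.pow(base, S + 1) === 0) S++;
  return S;
}

// An orthogonal layer: enable every step whose strength is at least S.
// For S <= maxS this is the same as N % base^S == 0.
function orthogonalLayer(S, steps, base = 2) {
  const row = [];
  for (let N = 0; N < steps; N++) row.push(strength(N, base) >= S);
  return row;
}
```

For example, `orthogonalLayer(2, 16)` enables steps 0, 4, 8 and 12, the same steps as the kick above.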

Tension

Of course, we don't want to create only fractal patterns: on their own they are boring. We want to bring in some musical tension by placing events on steps of low strength while leaving steps of equal or higher strength silent, creating a sensation of imbalance, or tension.

In a 16-step sequencer, we would program some very orthogonal sequences (following the `N % 2^S == 0` rule) and, on top of those, other sequences that do not follow it. The tension of a non-orthogonal sequence comes across best in contrast with an orthogonal one.
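As a rough illustration, the tension rule can be turned into a check that flags which events of a 16-step pattern create tension. This is a literal reading of the two paragraphs above, not code from the piece: `tenseSteps` and `fromSteps` are hypothetical helpers, and tension is judged within a single pattern only, so the contrast between layers is left to the listener.

```javascript
// Strength of step N: largest S with N % 2^S == 0, capped at maxS.
function strength(N, maxS = 4) {
  let S = 0;
  while (S < maxS && N % Math.pow(2, S + 1) === 0) S++;
  return S;
}

// An enabled step is "tense" when some silent step has the same or a higher strength.
function tenseSteps(pattern) {
  // Highest strength among the silent steps (-1 if no step is silent).
  let strongestSilent = -1;
  pattern.forEach((on, N) => {
    if (!on) strongestSilent = Math.max(strongestSilent, strength(N));
  });
  const tense = [];
  pattern.forEach((on, N) => {
    if (on && strength(N) <= strongestSilent) tense.push(N);
  });
  return tense;
}

// Build a boolean pattern from a list of enabled step numbers.
const fromSteps = (steps, len = 16) =>
  Array.from({ length: len }, (_, N) => steps.includes(N));
```

Under this reading the orthogonal kick (steps 0, 4, 8, 12) has no tense steps, while an off-beat layer on steps 2, 6, 10 and 14 is entirely tense, because the stronger step 0 is left silent.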

Translation into an interface

Most of the music composition interfaces I use are designed to avoid redundancy in the interaction. In terms of music interface design, it would feel absurd to have two different sequencers for the same event trigger, a bit as if a piano had two keys for the same hammer. However, I wanted to experiment with an interface that would encourage the user to make fractal patterns, where layers of orthogonal rhythms overlay layers of less orthogonal rhythms. A big inspiration was the Akira OST theme by the collective Geinō Yamashirogumi:

https://www.youtube.com/watch?v=Af5r8ONtacw

This is why the sound controls are redundant: each sample may have any number of sequencers. The point is that each sequencer runs at a different power-of-two pace, following the `N % 2^S == 0` rule. This means that, for the same timbre, if the user enables all the events in several sequencers, the events happen on every sixteenth of a measure, every half measure, every measure, every two measures, and so on.
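The layering can be checked with a small sketch. It assumes four sequencers on the same sample, all started together on beat 0, every event enabled, and paces of 2, 4, 8 and 16 beats; `hitsPerBeat` is a hypothetical helper. Counting how many sequencers fire on each beat shows a fractal accent pattern appearing without any accent being declared explicitly.

```javascript
// Several sequencers share one sample; each has every event enabled and
// fires once every `pace` beats. Count how many fire on each beat.
function hitsPerBeat(paces, beats) {
  const counts = [];
  for (let beat = 0; beat < beats; beat++) {
    counts.push(paces.filter((pace) => beat % pace === 0).length);
  }
  return counts;
}

console.log(hitsPerBeat([2, 4, 8, 16], 16).join(" "));
// → 4 0 1 0 2 0 1 0 3 0 1 0 2 0 1 0
```

The number of simultaneous triggers on each beat equals that beat's strength (plus one), which is exactly the orthogonal accent structure described in the Fractal patterns section.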

I expect the user to create plenty of events in any session, and since the interface is meant to encourage this, the sequencer buttons work in a "paintbrush" mode: the user can drag the mouse and create events all along its path, which gives a feeling similar to using MS Paint. This is why I opted to build my own user interface snippets: most of the ones I could find don't allow such a detailed level of control over the nuances of interaction. Also, working entirely in factors of two (using a 4/4 measure) inspired me to use a retro technological aesthetic. A Windows 95 style is easy to achieve with CSS properties, hence the Windows 95 ornamentation of the interactive piece.
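The paintbrush behavior can be sketched independently of the DOM, as a small state machine that receives press, enter and release events for button indices. This is a hedged illustration, not the piece's code: `PaintBrush` is a hypothetical name, and the choice that a stroke starting on an active button erases instead of painting is an assumption borrowed from common step-sequencer interfaces.

```javascript
// DOM-free sketch of "paintbrush" input over a row of sequencer buttons.
class PaintBrush {
  constructor(cells) {
    this.cells = cells;    // array of booleans, one per sequencer button
    this.painting = false; // true while the mouse button is held down
    this.value = true;     // what the current stroke writes
  }

  // Pressing a cell starts a stroke. The stroke writes the opposite of that
  // cell's state, so a drag that starts on an active cell erases (assumption).
  down(index) {
    this.painting = true;
    this.value = !this.cells[index];
    this.cells[index] = this.value;
  }

  // Entering a cell while the button is held paints it with the stroke value.
  enter(index) {
    if (this.painting) this.cells[index] = this.value;
  }

  // Releasing the mouse ends the stroke.
  up() {
    this.painting = false;
  }
}
```

In the browser, `down` would be bound to `mousedown` on each button, `enter` to `mouseenter` on each button, and `up` to `mouseup` on the window, so that releasing the mouse outside the grid still ends the stroke.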

Through the process of making these interactive pieces, I have discovered that the intention of writing clean code is often detrimental to a process of exploration. There are cases where one has a master plan of what to achieve and knows all the details, in which case it is best to have a big plan and build all the prototypes in an orderly fashion. In JavaScript, this would mean starting with clear modules and working with build pipelines such as Gulp and Browserify. This project was quite the opposite: I was not clear where the exploration would lead me, and not even clear which technologies I would use. I made many attempts to start the project in that orderly fashion. First of all, the preoccupation with the overall structure of the code took attention away from the exploration process itself, so I often ended up with well-structured code that did not achieve anything interesting. It also happened that very well-structured code didn't allow me to restructure something when I had a sudden intuition. For this exploration process, the technique that worked best for me was to start the whole project in a single JavaScript file, which I split once it got big, while all the prototypes stayed global. I was careful to create a wrapper (constructor) function for each abstract or visible entity, so that each one could be instantiated, because software that abuses global variables can't get far: the code becomes unreadable and changes become impossible to track.

Design of the sound

The most obvious sound design for rhythmic sequencers is sounds resembling the drums of popular music, as was the case with all the commercial sequencers of the eighties. This kind of percussion kit has two problems. First, there are patterns we are heavily accustomed to, so when using such a kit, our intuition always leads us to imitate them. Second, we even feel aversion to patterns that sound similar but not the same: it feels as if the pattern is trying to mimic another pattern we are used to hearing, but got it "wrong". It is precisely this notion of right and wrong that I needed to avoid in order to let the players explore without preconceptions. I would say that I opted to design a percussion kit rather than a drum kit.

Multiplayer

I also developed two modes of collaborative play, which I call "multiplayer mode". The first one assumes that each player is using a similar web browser with a mouse, speakers and a screen, and presents the same interface to each of them. Each player's interaction is transmitted to a Node server, stored by the server, and then broadcast from the server to all the clients, so that every client hears the same patterns. The second multiplayer mode assumes that there is one computer displaying the full interface and producing the sounds, while the players use their cellphones and listen to the sounds from the central computer. I designed this mode specifically to show it at the 2016 Dash Festival.
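The server's role in the first mode (store every edit, then broadcast it) can be sketched without committing to a transport. In this sketch each client is any object with a `send(message)` method; the class name `PatternRelay`, the message shape `{ seq, step, on }` and the replay to late joiners are assumptions for illustration, not the protocol the piece uses, although the replay mirrors the late-joiner behavior described in the next paragraph.

```javascript
// Sketch of a store-and-broadcast relay for shared sequencer edits.
class PatternRelay {
  constructor() {
    this.state = new Map();   // "sequencer:step" -> enabled (boolean)
    this.clients = new Set(); // objects with a send(message) method
  }

  // A late joiner first receives everything stored so far.
  join(client) {
    this.clients.add(client);
    for (const [key, on] of this.state) {
      const [seq, step] = key.split(":").map(Number);
      client.send({ seq, step, on });
    }
  }

  leave(client) {
    this.clients.delete(client);
  }

  // Store one player's edit, then broadcast it to every connected client.
  edit(seq, step, on) {
    this.state.set(`${seq}:${step}`, on);
    for (const client of this.clients) client.send({ seq, step, on });
  }
}
```

Keeping the authoritative state on the server means a reconnecting or late client can rebuild every pattern from the stored edits alone.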

The main challenge in designing the first multiplayer mode was keeping the sequencers in sync. On each client's computer, a sequencer does not start running until a sequence is programmed into it. This means that a sequencer's first step is not aligned to any particular multiple of the measure. I designed it this way so that there would be more variation in the resulting patterns. This caused a problem: a player who connected later would receive all the patterns at once, so all the sequencers' first steps would coincide, and a pattern that sounded interesting in the first player's browser would sound different in the later player's browser. To solve this, I added an absolute beat count to the global metronome (at first, each sequencer kept its own count of beats since the start of the program). When any sequencer started, it calculated its displacement with respect to the absolute count and stored it in a displacement variable, which was then added when evaluating which step to play.
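One way to express the displacement fix is sketched below. It assumes the displacement is taken modulo the sequencer's loop period (`pace * len` beats) and is sent to other clients together with the pattern, so a late joiner evaluates steps with the same value; neither detail is stated above. `displacementFor` and `stepAt` are hypothetical names.

```javascript
// Modulo that stays non-negative, so beats before the offset still map correctly.
const mod = (a, n) => ((a % n) + n) % n;

// When a sequencer starts at `startBeat` of the absolute count, its displacement
// is its position within its own loop period (pace * len beats).
function displacementFor(startBeat, pace, len) {
  return mod(startBeat, pace * len);
}

// Which step (if any) a sequencer plays on `absoluteBeat`.
function stepAt(absoluteBeat, displacement, pace, len) {
  const shifted = mod(absoluteBeat - displacement, pace * len);
  if (shifted % pace !== 0) return null; // not this sequencer's turn
  return shifted / pace;                 // 0 .. len - 1
}
```

Because `stepAt` depends only on the absolute beat and the shared displacement, a sequencer received by a late player keeps the same phase relative to the other sequencers as in the first player's browser, instead of restarting at step 0 together with all of them.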

The second multiplayer mode, played with smartphones, raised the challenge of relating each player's sequencer to the resulting sound. Although each player had four sequencers that appeared clearly on screen, had the same colors and responded to the player's input, it was hard for players to understand how their sequences actually affected the resulting pattern. In the end, the players' inputs worked mostly as a source of randomness for a stochastic composition (which also happens a lot in the single-player version). I think that while it is fine for the single-player version to work stochastically, in the context of social interaction it doesn't make much sense: if one can't purposefully choose the result, there is no point in interacting with the piece rather than just listening to somebody else's composition. Furthermore, if players can't understand the relation between their interaction and the sound, I could just as well have taken a completely arbitrary source of data for the composition, and the result would have been similar. The only positive aspect in this regard was that the relation between interaction and result, although too hard to perceive, was clearly direct and consistent.

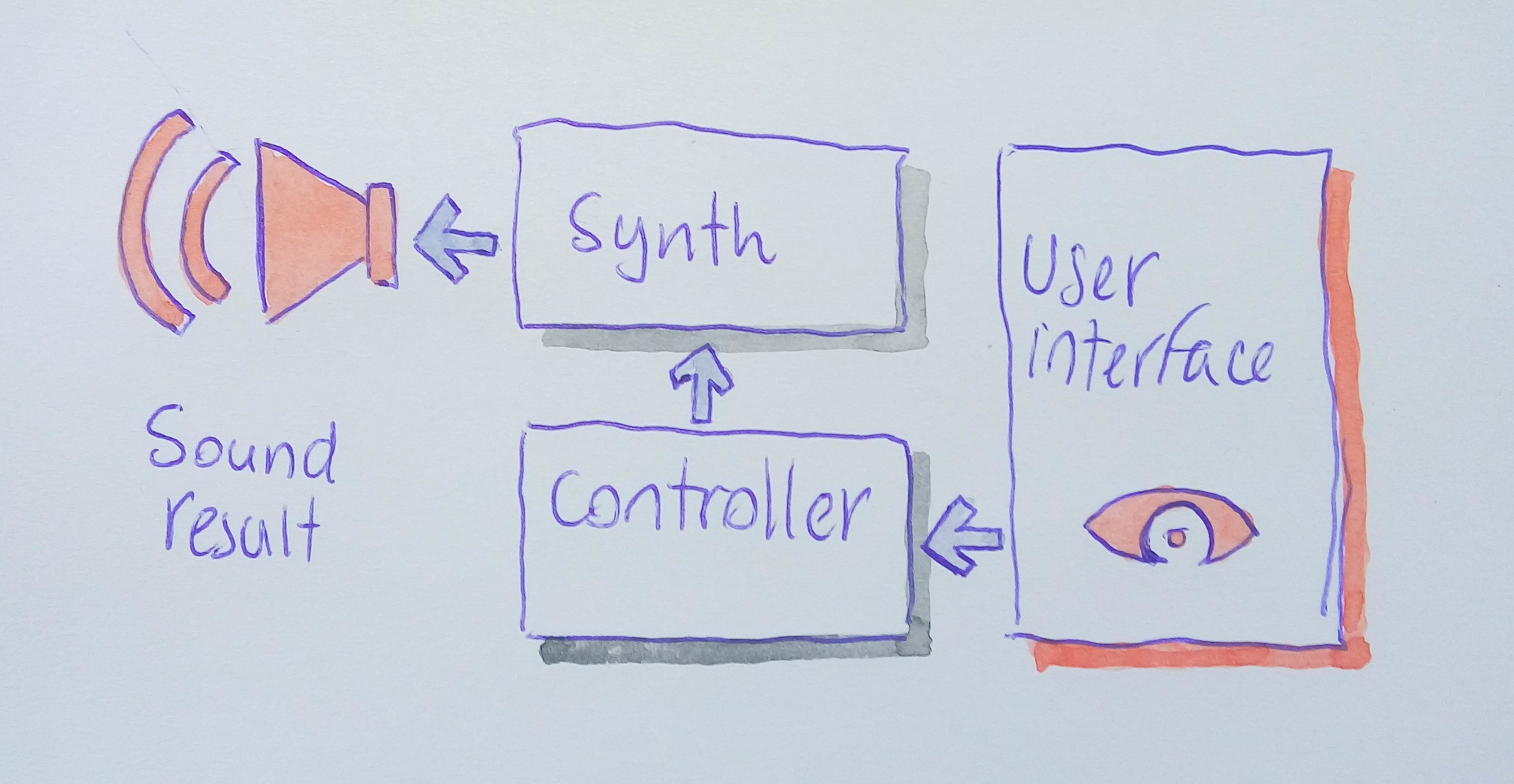

This problem also happens with professional music hardware, when several musicians improvise using many machines. A machine may keep playing the same sound for a long time, and either each performer thinks the sound is intended by another, or nobody knows which machine is producing it. I have learned from this that it is very easy for a sound interface to reflect the state of the sound controller or of the synthesizer. What is really hard is for the interface to also reflect, synesthetically, the actual sonic result of the system. I think a better version of this idea needs the following:

- Each player needs a very distinct sound, so that the effects of their interaction are sonically clear (this imposes a whole constraint on sound expression in collaborative performances).

- There should be a limited number of users interacting with the piece at any given time, so that there are few sounds from which each player has to distinguish their own.

- On top of this, there should be very clear synesthetic visual feedback that relates each sound to its sound controller.

Technical details

Dependencies

- Tone.js, to handle the sound event triggers, the metronome, and sample loading.

- NexusUI, specifically to display the wave shapes at the bottom of the screen.

- jQuery, because all the sequencers, buttons and sliders are DOM elements.

- Node.js, for the multiplayer version.

- MsComponents, which is not actually a dependency: I am working on turning the interaction elements into a library.

Setup

Sequencers

The program starts by creating Tone.js samplers. As each sampler is created, its sliders and wave shape controllers are added to the window. The sequencers are entities independent of the samplers, since there may be more than one sequencer per sampler. A metronome function iterates through the sequencers, and each one triggers a step if the current measure corresponds to its pace.

Each sequencer has a different length and a different pace. The length is the number of events it has. The pace is the number of measures needed to advance the sequencer by one event (e.g. a pace of 4 means there are four measures between consecutive events).

```javascript
this.len = Math.pow(2, (seqProg % 5) + 1);  // length: 2, 4, 8, 16 or 32 events
this.evry = Math.pow(2, (seqProg % 4) + 1); // pace: 2, 4, 8 or 16 measures per event
```
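The two lines above can be placed in a minimal sequencer and metronome, sketched here in the global-constructor style the text describes. `Sequencer.prototype.tick`, `metronomeTick`, the `events` array and the `trigger` callback are hypothetical; in the piece, ticks come from the Tone.js-based metronome and the trigger plays a sampler. Because 5 and 4 are coprime, consecutive values of `seqProg` cycle through all 20 combinations of length and pace before repeating.

```javascript
// Minimal sequencer; `trigger` stands in for playing the sampler (hypothetical).
function Sequencer(seqProg, trigger) {
  this.len = Math.pow(2, (seqProg % 5) + 1);  // number of events
  this.evry = Math.pow(2, (seqProg % 4) + 1); // metronome ticks per event (pace)
  this.events = new Array(this.len).fill(false);
  this.trigger = trigger;
}

// Called on every metronome tick with the running tick count.
Sequencer.prototype.tick = function (beat) {
  if (beat % this.evry !== 0) return; // only advance on multiples of the pace
  const step = (beat / this.evry) % this.len;
  if (this.events[step]) this.trigger(step, beat);
};

// The metronome iterates through every sequencer on each tick.
function metronomeTick(sequencers, beat) {
  for (const seq of sequencers) seq.tick(beat);
}
```

With `seqProg = 0` the sequencer has 2 events and a pace of 2, so enabling only its first event makes it play on ticks 0, 4, 8 and so on.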

Mixer & range selectors

The panel below has an array of buttons, vertical faders, and a selector for the start and end points of the loops. The buttons and faders are made purely of DOM elements, to which many interaction events are attached. The start and end point selectors come from a NexusUI module and are drawn on a canvas. All of these are constructed when each sound file loads.
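The value mapping behind a DOM fader and the loop-range selector can be sketched as two pure functions. This assumes the range selector reports normalized start and end positions between 0 and 1 (the actual NexusUI output is not described here); `faderValue`, `loopPoints` and `clamp` are hypothetical helpers, and how the resulting seconds are passed to Tone.js is not shown.

```javascript
// Restrict x to the interval [lo, hi].
const clamp = (x, lo, hi) => Math.min(hi, Math.max(lo, x));

// Vertical fader: the top of the track is 1, the bottom is 0.
// `clientY` comes from a mouse event, `rect` from element.getBoundingClientRect().
function faderValue(clientY, rect) {
  return clamp(1 - (clientY - rect.top) / rect.height, 0, 1);
}

// Range selector: normalized [start, end] converted to seconds of the loaded
// sample. The endpoints are swapped if they were dragged past each other.
function loopPoints(start, end, durationSeconds) {
  const [a, b] = start <= end ? [start, end] : [end, start];
  return {
    loopStart: clamp(a, 0, 1) * durationSeconds,
    loopEnd: clamp(b, 0, 1) * durationSeconds,
  };
}
```

Clamping lets a fader keep responding while the mouse is dragged above or below its track, which matters when the interaction is built directly from DOM events.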

Production of the sound

The kit was made entirely with Native Instruments' Massive synth. I took advantage of previous work in which I had designed a drum kit with Massive, and changed some of its parameters to remove the association with a drum kit as much as possible, while keeping the sounds distinct from one another. This is why the MsCompose kit resembles a drum kit in that there are some bass, oscillator-based sounds as well as some filtered, noise-based sounds. The character of the whole kit comes from the use of a comb filter (a high-feedback delay to which, I suspect, a high-resonance low-pass is added) and a band-pass filter. I also chose very electronic-sounding oscillators such as triangle or square waves over the more natural sound of sines, which fit well with the comb filter.
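To illustrate what a comb filter does, here is a generic feedback comb filter applied to an array of samples. It is not Massive's implementation, and it omits the resonant low-pass the text suspects is in the feedback path; `combFilter` is a hypothetical name.

```javascript
// Generic feedback comb filter: y[n] = x[n] + feedback * y[n - delay]
function combFilter(input, delay, feedback) {
  const output = new Float32Array(input.length);
  for (let n = 0; n < input.length; n++) {
    const delayed = n >= delay ? output[n - delay] : 0;
    output[n] = input[n] + feedback * delayed;
  }
  return output;
}

// An impulse shows the echoes that form the comb: spikes at samples
// 0, 3, 6 and 9 with heights 1, 0.5, 0.25 and 0.125.
const impulse = new Float32Array(10);
impulse[0] = 1;
console.log(Array.from(combFilter(impulse, 3, 0.5)));
```

With feedback close to 1, the echoes decay slowly and the filter rings at `sampleRate / delay` Hz and its harmonics, which gives short percussive sounds a pitched, metallic resonance.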